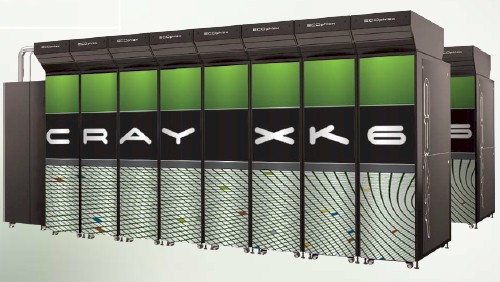

Supercomputer maker Cray has finally jumped on the GPU coprocessor bandwagon, and it looks like someone is going to have to hitch Belgian draft horses to that wagon and reinforce its axles once the XK6 hybrid super starts shipping in the fall.

Cray made a name for itself as a provider of vector processors back in the 1970s and morphed into a maker of massively parallel x64 machines with proprietary interconnects in the 1990s. With GPUs – whether they are made by Nvidia or AMD – being more like vector engines than not, the adoption of a GPU as a coprocessor is a return to its past. Or more precisely, considering that Cray is really Tera Computer plus Cray Research plus Octiga Bay, one of its pasts.

Cray didn't need GPUs to break through the petaflops barrier, but it is going to need GPUs or some kind of coprocessor to break through the exascale barrier. Barry Bolding, vice president of products at Cray, tells El Reg that "customers are a little dissatisfied that scalar performance has flattened out" in recent years, referring to the clock speeds of the x64 processors used inside of generic supercomputer clusters (usually linked by Ethernet or InfiniBand networks) or the monster machines created by Cray and Silicon Graphics using their respective "Gemini" XE and "UltraViolet" NUMAlink 5 interconnects.

"Cray has been the first to the GPU party, but we have a very good understanding of petascale applications," Bolding boasted, adding that "putting together a box that has both CPUs and GPUs is the easy part."

In fact, says Bolding, Cray has spent more money on integrating its software stack – a custom Linux environment, its Ethernet emulation layer for the Gemini interconnect, and various development tools for parallel environments – with GPUs than it has spent redesigning the blade servers at the heart of its "Baker" family of XE6 and XE6m machines so they can adopt GPU coprocessors.

"We think that our vector experience helps," Bolding says. "The codes that were good for vectors will generally perform well on GPUs. And we really do view this as a stepping stone to exascale. GPUs are today the most effective accelerator that is available." Bolding added that Cray's future designs will not be locked into either AMD's HyperTransport or Intel's QuickPath interconnects, but rather will hang accelerators off PCI Express links. (Very soon, PCI Express 3.0 links, but not this time around.)

The Cray XE6 machines are based on eight-socket blade servers, complete with main memory and two Gemini interconnect ASICs. The prior generation of machines, the XT6 supers, were based on the same "Magny-Cours" Opteron 6100 processors that are used in the XE6 blades, but used the much slower and less scalable SeaStar2+ interconnect. The SeaStar2+ interconnect is the great-grandson of the "Red Storm" interconnect that Cray developed for Sandia National Laboratory, delivered in 2003, and later commercialized as the XT3.

The Cray XE6 machines are based on eight-socket blade servers, complete with main memory and two Gemini interconnect ASICs. The prior generation of machines, the XT6 supers, were based on the same "Magny-Cours" Opteron 6100 processors that are used in the XE6 blades, but used the much slower and less scalable SeaStar2+ interconnect. The SeaStar2+ interconnect is the great-grandson of the "Red Storm" interconnect that Cray developed for Sandia National Laboratory, delivered in 2003, and later commercialized as the XT3.

Not waiting on Kepler

As El Reg previously reported, Oak Ridge National Laboratory – one of the US Department of Energy's big nuke/supercomputing labs in Tennessee – had already let slip two months ago that it was building a whopping 20-petaflops machine, called Titan and using a hybrid CPU-GPU design, that would start rolling into its data center later this year with full operation in 2012.

While neither Cray nor Oak Ridge have confirmed this, it is reasonable to presume that Titan is actually an XK6 super. It could also be a variant of it, perhaps with a higher ratio of GPUs to CPUs, which Bolding says will be available through Cray's Custom Engineering unit.

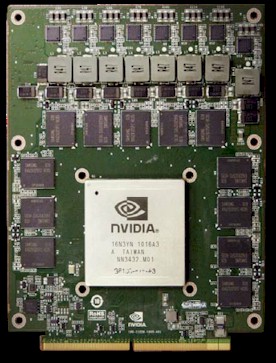

Nvidia's Tesla X2090 GPU coprocessor

The goal with the XK6 ceepie-geepie blades was to keep the XK6 in the same thermal envelope as the XE6. If Cray could do that, then it would not have to do anything special in terms of packaging or cooling to use the GPUs. Cray met that design goal, and you can pull out an XE6 blade and slide in an XK6 blade and nothing is going to melt, even though the X2090 GPU is rated at 225 watts peak. Companies can mix and match XE6 and XK6 blades in a single system, and Bolding says that many customers will do that so they can support different workloads.

The cabinets used in the XE6 and XK6 supercomputers can house up to 24 blades and have a peak-power rating of over 50 kilowatts as their design point, according to Bolding. That, however, is not how much power they will draw in the field – that will depend on how the applications hit the CPUs and GPUs, of course.

The fact that Cray is waiting for the Opteron 6200 processors for the XK6 and not just shipping this box right now with the current Opteron 6100s suggests that Nvidia is not actually ready to ship the Tesla X2090 GPU in the volumes that Cray needs. (Technically speaking, it has not even been announced yet.) It also suggests that while Cray has confidence in AMD's delivery of the Opteron 6200 processors, the future "Kepler" GPU expected by the end of this year is not going to be ready for whatever time Cray wanted to put it into the XK6 super.

Cray cannot, of course, give out any peak performance ratings on the Opteron 6200 side of the card, but each of the Tesla X2090 GPU cards is rated at 665 gigaflops doing double-precision floating-point operations. Each blade has four GPUs and there are 24 in a cabinet, so that gets you to 63.8 teraflops per cabinet just on the GPU side of the XK6 ceepie-geepie.

The current XE6 blades using the 12-core Opteron 2100s are rated at around 90 to 100 gigaflops per socket, according to Bolding. Add four cores and goose the clock speed a bit on the chips and you might be able to push it up to 160 gigaflops – or at least that is El Reg's guess. That works out to 640 gigaflops of CPU number-crunching power for four Interlagos sockets, which is pretty good compared to the 800 gigaflops that the all-Opteron, eight-socket XE6 blade can deliver.

Add it up, that's somewhere around 3.5 teraflops per XK6 blade of peak floating-point oomph, or something on the order of 83 teraflops per cabinet. With 200 cabinets – roughly the size of the "Jaguar" machine down at Oak Ridge – you are talking about something north of 16.6 petaflops, or nearly an order of magnitude better performance.

With the 3D torus of the Gemini interconnect, Cray can build out as far as 300 cabinets, so a ceepie-geepie super could hit around 25 petaflops. Replace the Tesla X2090s with some Nvidia Kepler equivalents, and a 300-cabinet XK6 super could deliver something on the order of 5.8 petaflops on the Opteron side and 29 petaflops on the Tesla side. You only need something like 15 megawatts of power for that behemoth XK6 supercomputer alone, and maybe 25 megawatts for the whole data center.

The launch customer for the XK6 ceepie-geepie super is not going to be Oak Ridge, by the way, but instead the Swiss National Supercomputing Centre, which is also taking delivery of the first XMT-2 massively multithreaded super, based on the Gemini interconnect and Cray's future ThreadStorm-2 processor.

One more thing: in case you are wondering, the new Cray box is called the XK6 because when they looked at XG6, the font for the G and the 6 looked weird next to each other. ®